How MongoDB and LlamaIndex are solving the reliability crisis that's keeping autonomous AI from reaching production

The promise of autonomous AI agents is undeniable. Systems that can operate independently, learn continuously, and execute complex workflows without constant human oversight represent the next major leap in enterprise automation. Yet despite billions in investment and countless demos of impressive prototypes, most organizations struggle to deploy autonomous agents that work reliably in production.

The problem isn't that autonomous agents aren't powerful enough—it's that they aren't reliable enough.

Current agent implementations hit a reliability wall the moment they leave controlled environments. Token-driven loops drift unpredictably. Prompts degrade with each model update. Context windows become polluted with irrelevant information. State management fails across sessions. What works brilliantly in a demo breaks down when faced with the messy realities of enterprise environments.

This reliability crisis has created a fundamental tension in AI development: the more autonomous an agent becomes, the less predictable and controllable it appears to be. Many practitioners have responded by pulling back from autonomy entirely, choosing deterministic workflows over adaptive intelligence.

But this is a false choice. The future of autonomous agents doesn't require abandoning reliability—it requires building autonomy on a foundation of reliable infrastructure. When MongoDB's persistent state management converges with LlamaIndex's intelligent agent framework, autonomous agents can finally operate with both independence and consistency.

The Reliability Challenge: Why Current Agents Can't Reach Production

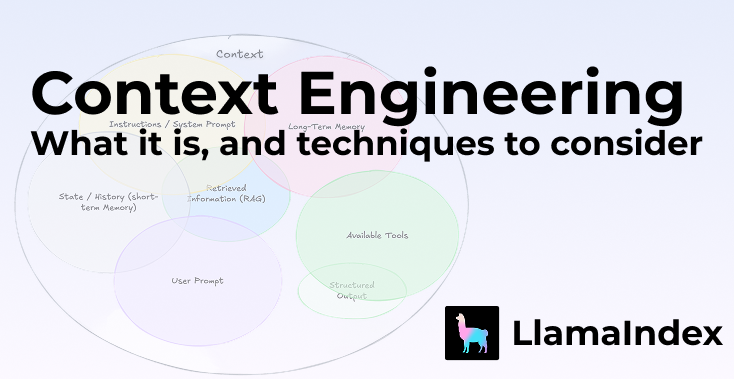

The disconnect between autonomous agent promises and production reality stems from four fundamental challenges: state management fragility, context pollution and drift, lack of deterministic control, and memory inconsistencies. To understand how these challenges manifest in practice—and how they can be solved—consider what reliable autonomous operation actually looks like.

Scenario: The Continuously Reliable Enterprise

The following scenario is a fictional illustration designed to demonstrate potential capabilities. Any similarity to real customers, users, or businesses is coincidental.

Imagine visiting a manufacturing facility in 2027 where autonomous agents have been managing complex production optimization for eighteen months—not eighteen days or weeks, but continuous, reliable operation across multiple economic cycles, supply chain disruptions, and operational changes.

Maria, the plant manager, walks through the facility knowing that her autonomous systems aren't just intelligent—they're dependably intelligent. This reliability didn't happen by accident. It emerged from solving the fundamental challenges that plague most autonomous agent implementations.

State Management That Actually Persists

When unexpected delays hit a key supplier last month, the agents didn't just react with standard contingency protocols. They drew from fourteen months of similar disruptions, cross-referenced current market conditions with historical patterns, and implemented a hybrid solution that combined lessons from previous supply shortages with real-time supplier negotiations. Unlike typical autonomous agents that treat each task as an isolated event, these systems maintain detailed state about every supplier relationship, every production optimization experiment, and every market condition they've encountered.

This persistent state management solves the fragility problem that causes most autonomous agents to lose critical context between interactions. When system restarts occur or sessions time out, the agents retain complete access to their accumulated knowledge and ongoing context—enabling the kind of compound intelligence that sustained autonomous operation requires.

Intelligent Context Without the Noise

The autonomous systems don't just store everything—they intelligently organize memory that gets more valuable over time. When a new type of production challenge emerges, the agents don't start from scratch or get overwhelmed by irrelevant historical data. They intelligently retrieve relevant patterns from months of accumulated experience, apply proven strategies with situational modifications, and update their understanding based on outcomes.

This approach eliminates the context pollution that plagues current retrieval systems, where agents become overwhelmed by irrelevant information or lose decision-making quality due to noisy conversation histories. Instead of simple vector similarity searches that surface irrelevant context, the system learns which types of historical information prove valuable in different situations.

Deterministic Control Within Autonomous Operation

Six months ago, when a critical piece of equipment failed during peak production, the autonomous systems didn't just manage the immediate crisis—they initiated a complex recovery process that involved supplier negotiations, production rescheduling, maintenance prioritization, and customer communication. The entire response was autonomous, yet auditable and consistent with established business processes.

This demonstrates how reliable infrastructure enables autonomous agents to operate within well-defined boundaries while maintaining the transparency and control that production environments require. Rather than becoming black boxes that make opaque decisions, these agents provide comprehensive observability into their decision-making processes.

Memory That Learns and Improves

What makes this scenario remarkable isn't the sophistication of individual decisions—it's the compound reliability that emerges from persistent, consistent operation. Each challenge becomes institutional knowledge rather than isolated problem-solving. The agents benefit from constantly improving memory access that becomes more precise over time, developing an increasingly sophisticated understanding of relevance that goes far beyond simple pattern matching.

The key breakthrough is reliability at scale. These agents operate autonomously not because they're perfect, but because their reliable infrastructure ensures consistent access to relevant historical context, predictable state management across system restarts, and intelligent memory consolidation that preserves valuable insights while discarding outdated information.

The Reliable Infrastructure: How MongoDB + LlamaIndex Enable Dependable Autonomy

These scenarios become possible only when autonomous agents operate on infrastructure designed for reliability, not just intelligence. The convergence of MongoDB's persistent state management with LlamaIndex's intelligent agent framework creates the foundational layer that autonomous agents need for sustained, dependable operation.

Persistent State That Survives Everything

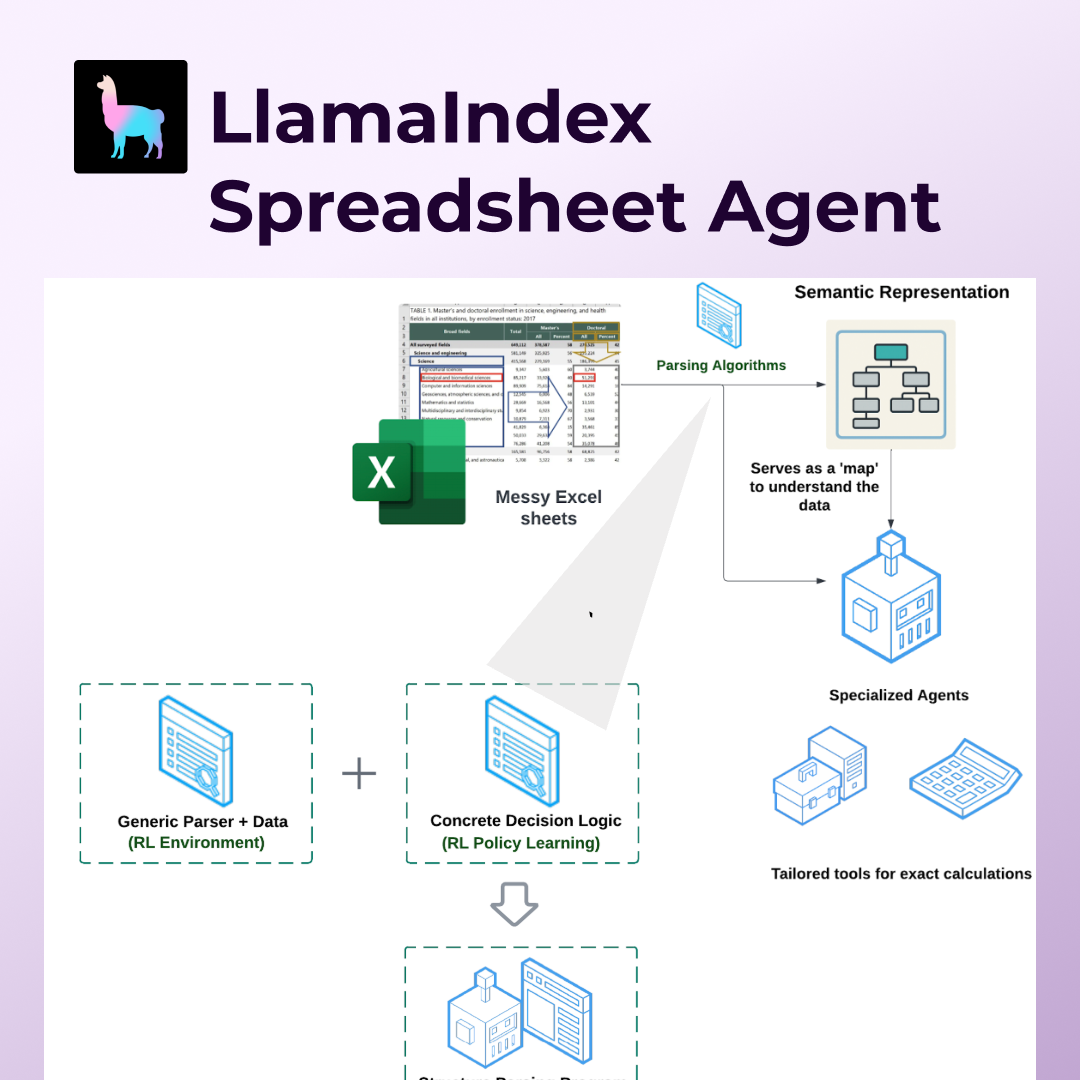

MongoDB's document-oriented architecture naturally accommodates the complex, evolving state that autonomous agents generate. Where traditional databases are optimized for structured transactions, autonomous agents require storage that can persist decision trees, learning patterns, relationship maps, and contextual metadata that grows organically with agent capabilities.

More critically, MongoDB ensures this state remains accessible and consistent across system failures, updates, and scaling events. When an autonomous agent resumes operation after planned maintenance or unexpected outages, it retains complete access to its accumulated knowledge and ongoing context. This persistence enables the kind of long-term autonomous operation that compound intelligence requires.

The database's flexible schema handles the reality that autonomous agents generate unpredictable types of state information. As agents develop new capabilities or encounter novel situations, their state storage can evolve without requiring schema migrations or system redesign.
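As a rough illustration of the restart behavior described above, the sketch below checkpoints agent state as one JSON document per agent and reloads it from a fresh store object. A local directory stands in for a MongoDB collection so the example runs without a server; `FileStateStore`, `demo_restart`, and every field name are hypothetical. With PyMongo, the same pattern would typically map to `update_one(..., upsert=True)` for saving and `find_one` for loading.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Optional


class FileStateStore:
    """Stand-in for a document collection: one JSON document per agent id."""

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def save(self, agent_id: str, state: dict[str, Any]) -> None:
        # Write to a temp file and rename it, so a crash mid-write
        # never leaves a half-written document behind.
        target = self.root / f"{agent_id}.json"
        tmp = target.with_suffix(".tmp")
        tmp.write_text(json.dumps(state))
        tmp.replace(target)

    def load(self, agent_id: str) -> Optional[dict[str, Any]]:
        target = self.root / f"{agent_id}.json"
        if not target.exists():
            return None
        return json.loads(target.read_text())


def demo_restart() -> Optional[dict[str, Any]]:
    """Save state, discard the store object, and reload as a new process would."""
    with tempfile.TemporaryDirectory() as tmp_dir:
        root = Path(tmp_dir)
        first = FileStateStore(root)
        first.save("planner-1", {"step": 3, "open_orders": ["A17"]})
        # A later agent version adds a nested field; no migration step is needed
        # because each document carries its own shape.
        first.save(
            "planner-1",
            {"step": 4, "open_orders": ["A17"], "supplier_notes": {"acme": "late twice"}},
        )
        second = FileStateStore(root)  # simulates the agent coming back after a restart
        return second.load("planner-1")
```

The second `save` call writes a document with a different shape from the first, which is the flexible-schema property in miniature: the store does not need to know in advance which fields an agent will accumulate.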

Intelligent Memory That Reduces Noise

LlamaIndex's retrieval systems solve the context pollution problem that plagues current autonomous implementations. Rather than overwhelming agents with every potentially relevant piece of historical information, LlamaIndex enables intelligent context selection that improves decision quality while maintaining performance.

The system learns which types of historical context prove valuable in different situations, developing an increasingly sophisticated understanding of relevance that goes far beyond simple vector similarity. This learning happens continuously, so agents benefit from constantly improving memory access that becomes more precise over time.
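One way to picture relevance that goes beyond raw vector similarity is to weight each similarity score by how useful that kind of memory has proven in a given situation. The sketch below is an illustrative assumption, not LlamaIndex's actual mechanism: `FeedbackReranker`, the situation and kind labels, and the moving-average update rule are all hypothetical.

```python
import math
from collections import defaultdict
from typing import Any


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class FeedbackReranker:
    """Blend vector similarity with a learned usefulness score per (situation, memory kind)."""

    def __init__(self, learning_rate: float = 0.2) -> None:
        self.learning_rate = learning_rate
        # Every (situation, kind) pair starts at a neutral usefulness of 0.5.
        self.usefulness: dict[tuple[str, str], float] = defaultdict(lambda: 0.5)

    def record_outcome(self, situation: str, kind: str, helped: bool) -> None:
        # Exponential moving average toward 1.0 (helped) or 0.0 (did not help).
        key = (situation, kind)
        target = 1.0 if helped else 0.0
        self.usefulness[key] += self.learning_rate * (target - self.usefulness[key])

    def rank(
        self,
        situation: str,
        query_vec: list[float],
        memories: list[dict[str, Any]],
        top_k: int = 2,
    ) -> list[str]:
        # Score = similarity * learned usefulness; highest score first.
        scored = [
            (cosine(query_vec, m["vector"]) * self.usefulness[(situation, m["kind"])], m["id"])
            for m in memories
        ]
        scored.sort(reverse=True)
        return [memory_id for _, memory_id in scored[:top_k]]
```

With no feedback recorded, the ranking reduces to plain cosine similarity. After a few outcomes are recorded, a slightly less similar memory of a kind that has helped before can outrank a closer match of a kind that has not.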

Most importantly, LlamaIndex's retrieval intelligence scales with agent capability. As autonomous agents become more sophisticated, their memory requirements become more complex. LlamaIndex's retrieval layer is designed so that a growing memory does not degrade performance or reliability.

Deterministic Foundations for Adaptive Intelligence

The MongoDB + LlamaIndex combination enables a hybrid approach that combines deterministic reliability with adaptive intelligence. Critical business processes can be implemented with predictable, auditable workflows, while autonomous agents provide adaptive optimization within well-defined boundaries.

LlamaIndex's Workflows abstraction exemplifies this balanced approach in practice. Rather than forcing developers to choose between rigid automation and unpredictable autonomy, Workflows enables event-driven, step-based execution that combines deterministic control where reliability matters most with autonomous decision-making where adaptability adds value. For example, a MongoDB-powered agent handling customer support can use deterministic workflows for compliance-critical steps like data validation and escalation procedures, while employing autonomous reasoning for response generation and problem-solving strategies.

This event-driven architecture allows complex agent behaviors to emerge from simple, auditable components. A workflow might deterministically validate incoming requests, autonomously analyze the problem using retrieval-augmented reasoning, deterministically check business rules, and then autonomously craft responses—all while maintaining complete observability into each decision point. When unexpected situations arise, the workflow can loop back through reflection patterns or branch into specialized handling procedures, with each step clearly defined and MongoDB providing persistent state across the entire process.
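The alternating pattern described above (deterministic validation, autonomous analysis, deterministic rule check, autonomous response, with a bounded loop back) can be sketched in plain Python. This is not the LlamaIndex Workflows API; it is a minimal stand-in that shows the control flow only. The step names, `ALLOWED_CATEGORIES`, the retry limit, and the `classify` and `draft` callables (placeholders for model calls) are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable

ALLOWED_CATEGORIES = {"billing", "shipping", "technical"}
MAX_ANALYSIS_ATTEMPTS = 2


@dataclass
class Request:
    text: str
    category: str = ""
    reply: str = ""
    attempts: int = 0
    trace: list[str] = field(default_factory=list)


def run_workflow(
    request: Request,
    classify: Callable[[str, int], str],
    draft: Callable[[str, str], str],
) -> str:
    """Drive the request through named steps and return the terminal step name."""

    def validate(req: Request) -> str:
        # Deterministic: reject empty requests before any model is involved.
        return "analyze" if req.text.strip() else "rejected"

    def analyze(req: Request) -> str:
        # Autonomous: delegate classification to the model stand-in.
        req.attempts += 1
        req.category = classify(req.text, req.attempts)
        return "check_rules"

    def check_rules(req: Request) -> str:
        # Deterministic: accept known categories, otherwise loop back
        # a bounded number of times before escalating.
        if req.category in ALLOWED_CATEGORIES:
            return "respond"
        return "analyze" if req.attempts < MAX_ANALYSIS_ATTEMPTS else "escalated"

    def respond(req: Request) -> str:
        # Autonomous: delegate response drafting to the model stand-in.
        req.reply = draft(req.text, req.category)
        return "done"

    steps = {
        "validate": validate,
        "analyze": analyze,
        "check_rules": check_rules,
        "respond": respond,
    }
    current = "validate"
    while current in steps:
        request.trace.append(current)  # every step taken is recorded for audit
        current = steps[current](request)
    request.trace.append(current)
    return current
```

Because each step returns only the name of the next step, the deterministic parts can be tested without a model, and the `trace` list gives a complete record of the path a request took, including any loop back through analysis.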

This architecture allows organizations to gradually expand autonomous agent capabilities without sacrificing the control and predictability required for production environments. Agents can demonstrate reliable autonomous behavior in constrained domains before being granted broader operational scope. Because Workflows break complex behaviors into discrete steps, developers can incrementally replace deterministic components with autonomous ones as confidence in agent reliability grows.

The infrastructure provides comprehensive observability into autonomous agent behavior, enabling developers to understand agent decision-making processes, identify areas for improvement, and maintain system reliability as autonomous capabilities expand. MongoDB's persistent state management ensures that workflow execution history, decision rationales, and performance patterns remain accessible for analysis and debugging, even as workflows evolve and scale.
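A minimal sketch of what keeping decision rationales accessible might look like is an append-only log keyed by workflow run. Here an in-memory list stands in for a database collection; `DecisionLog`, `DecisionRecord`, and the field names are hypothetical. With PyMongo, appending and reading back would typically map to `insert_one` and `find`.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class DecisionRecord:
    run_id: str
    step: str
    rationale: str
    recorded_at: str


class DecisionLog:
    """Append-only decision log; a list stands in for a database collection."""

    def __init__(self) -> None:
        self._records: list[dict[str, Any]] = []

    def record(self, run_id: str, step: str, rationale: str) -> None:
        # Timestamp in UTC so entries from different hosts stay comparable.
        entry = DecisionRecord(run_id, step, rationale, datetime.now(timezone.utc).isoformat())
        self._records.append(asdict(entry))

    def history(self, run_id: str) -> list[dict[str, Any]]:
        # Return copies in insertion order so callers cannot rewrite what was logged.
        return [dict(r) for r in self._records if r["run_id"] == run_id]
```

Storing the rationale alongside the step name is the design choice that matters here: it lets a later reviewer see not only which path a run took but why each decision was made.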

Production-Ready Scalability

Both MongoDB and LlamaIndex are designed for enterprise-scale deployment with the reliability guarantees that production autonomous agents require. This isn't research infrastructure that needs to be replaced when moving from prototype to production—it's the foundation for autonomous agents that operate reliably at scale from day one.

The combined platform handles the complex data management requirements that emerge when multiple autonomous agents operate simultaneously, share state, and coordinate activities. This enables the collaborative intelligence scenarios that represent the most transformative applications of autonomous agent technology.

Building the Reliable Foundation Today

Organizations that recognize the infrastructure requirements for reliable autonomous agents and begin building on persistent, intelligent foundations today will be positioned to deploy genuinely autonomous systems as the technology matures.

Real-World Validation: The Cemex Story

This approach isn't theoretical—it's being proven in production by organizations like Cemex, the global building materials leader. When Cemex's data science team began building AI agents for their commercial operations, they faced the exact reliability challenges that plague most autonomous agent implementations: fragmented workflows where every new agent started from scratch, manual processes that stretched simple projects to three weeks, and inconsistent results from unstructured document processing.

Rather than pursuing maximum autonomy, Cemex focused on building reliable infrastructure foundations using MongoDB for persistent state management and LlamaIndex for intelligent document processing and retrieval. The results support a reliability-first approach: development cycles dropped from three weeks to less than one day, users reported a dramatic improvement in answer quality, and the team could finally prioritize new business requests instead of wrestling with infrastructure challenges. As Daniel Zapata, Cemex's Principal Data Scientist, puts it: "Start with the business problem, not the tech. Choose a framework with an active community that drops cleanly into your stack. That shortcut matters." Cemex's success demonstrates that reliable infrastructure doesn't constrain autonomous agent capabilities; it enables them to scale.

For teams building on the same foundation, four practices follow from this reliability-first approach.

Start with Reliability Requirements: Rather than maximizing autonomous capabilities, focus on building infrastructure that can reliably support whatever level of autonomy your applications require. Autonomous agents with consistent, predictable behavior at limited scope are more valuable than highly capable agents that work unpredictably.

Design for Persistent Intelligence: Build memory and state management systems that improve agent performance over time rather than treating each interaction as isolated. The compound benefits of reliable memory infrastructure become more valuable as agents operate longer and encounter more diverse situations.

Balance Autonomy with Control: Implement autonomous capabilities within frameworks that maintain deterministic control over critical business processes. The goal isn't maximum autonomy—it's reliable autonomy that enhances rather than replaces human oversight and business process integrity.

Invest in Observable Infrastructure: Deploy autonomous agents with comprehensive monitoring, logging, and debugging capabilities from the beginning. Reliable autonomous operation requires understanding agent behavior patterns and continuously refining system performance.

The partnership between MongoDB and LlamaIndex represents the kind of infrastructure convergence required for the next phase of autonomous agent development. By solving the reliability challenges that currently prevent autonomous agents from reaching production, this collaboration enables organizations to move beyond impressive demos toward autonomous systems that deliver sustained business value.

The future of autonomous agents isn't about choosing between intelligence and reliability. It's about building intelligence on foundations reliable enough to support truly autonomous operation. That future is beginning now.