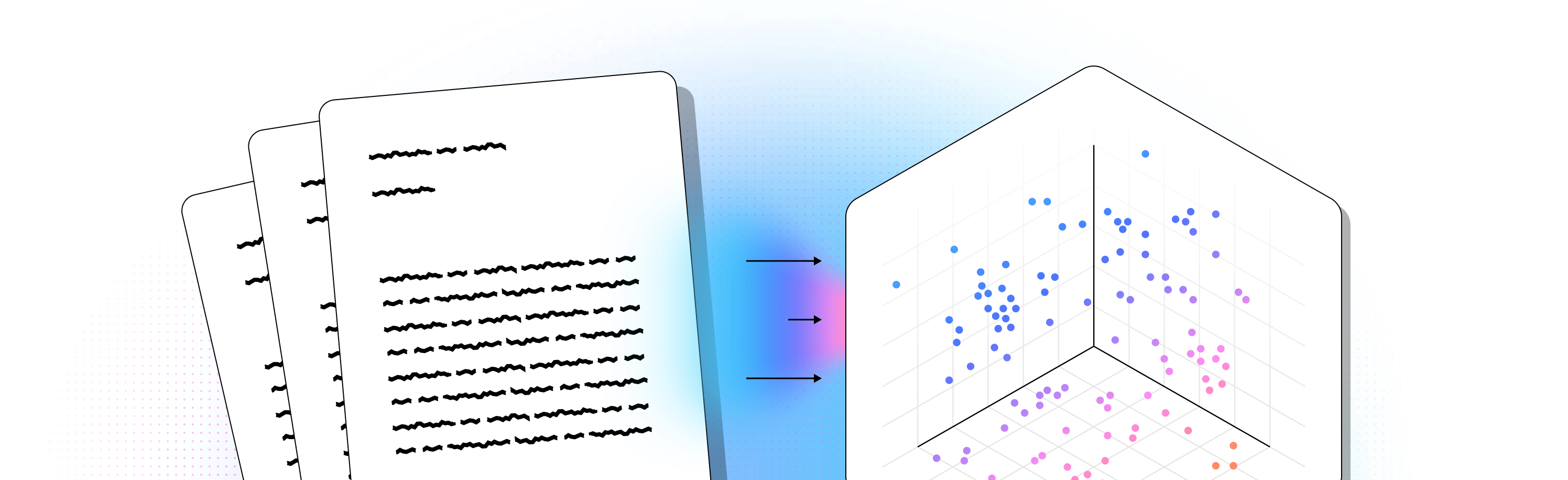

Power agents with searchable knowledge bases

LlamaCloud Index makes your data ready for retrieval with intelligent chunking and embedding to ensure accuracy at scale

- 500M+ documents processed
- 300k+ LlamaCloud users

Why Index

Ensure data is ready for high-quality retrieval

Durable data pipelines

Leverage connectors to popular data sources and destinations with incremental syncs to keep data fresh

Embedding model choice

Choose your preferred embedding model or use our recommended settings

Advanced retrieval

Ensure high accuracy and contextual relevance for an efficient knowledge system

Multimodal indexing

Index text and images for high-accuracy retrieval across modalities

Test and evaluate

Test and evaluate your index with OTEL and other integrations

Context-aware agents

Use indexed data for downstream workflow automation with AI agents

Designed for developers. Documented for clarity

Go even further with workflow automation

Trusted by leading AI builders and enterprise teams

We’ve helped leading teams go from prototype to production with real-world results.

Testimonials

Leading our organization's internal RAG assistant development, LlamaIndex’s rich ecosystem of connectors and thoughtful abstractions has enabled us to rapidly iterate on complex data pipelines and LLM-powered solutions. What truly sets LlamaIndex apart is their team's ability to rapidly integrate cutting-edge research and embrace community contributions, enabling us to continuously experiment with state-of-the-art approaches. Their recent focus on Workflows demonstrates their forward-thinking approach, helping us stay at the forefront of generative AI innovation.

LlamaCloud's capabilities have played a significant role in helping standardize the development of enterprise knowledge assistants at KPMG. The platform's intuitive interface for configuring RAG pipelines allows us to leverage cutting-edge techniques while maintaining consistency. Its ability to handle multi-modal data embedded within documents during both ingestion and retrieval has been valuable in unlocking insights from our diverse enterprise corpora. LlamaCloud is a foundational capability in our AI & Data Center of Excellence, supporting our efforts to drive systematic AI adoption in a trusted manner.

LlamaCloud’s ability to efficiently parse and index our complex enterprise data has significantly bolstered RAG performance. Prior to LlamaCloud, multiple engineers needed to work on maintenance of data pipelines, but now our engineers can focus on the development and adoption of LLM applications.