How agentic OCR and LLM-powered workflows transform automation and accuracy.

The Landscape: Why Document AI Matters

Every organization runs on documents: invoices, contracts, reports, receipts, and compliance forms. For years, these were handled by a patchwork of systems like OCR (optical character recognition), IDP (intelligent document processing), and RPA (robotic process automation).

Traditional systems work well on predictable layouts but struggle with real-world complexity. Layout changes, images, tables, charts, or handwritten notes often break automated pipelines and force manual review. Businesses spend large sums maintaining templates and retraining models for each new document style.

Document AI represents a new category of automation. Powered by large language models (LLMs), it doesn’t just extract text, it understands meaning, reasons through context, and takes action. Instead of isolated components, Document AI acts as a connected intelligence layer that reads, reasons, and executes.

From OCR + RPA to Agentic Systems

Legacy document systems follow a rigid sequence: extract, classify, then act. They can only automate what they are explicitly trained to recognize.

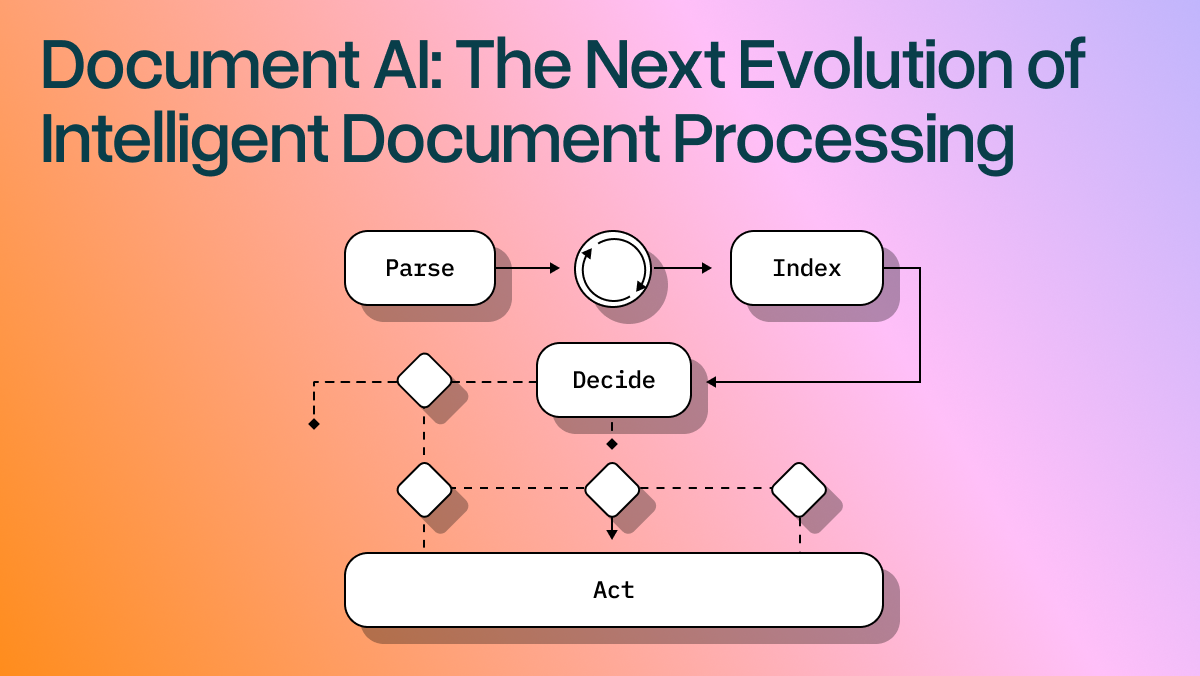

Document AI replaces this with agentic systems that reason across the entire process. These systems combine two core capabilities:

- Agentic OCR: Vision-language models that interpret visual and semantic structure rather than pixels.

- Agentic Workflows: LLM-based reasoning pipelines that coordinate multi-step logic, error recovery, and decision-making.

Together, these form the basis of more generalized, and complex automation over traditional document workflows often called document AI, a term coined to describe a new generation of intelligent, self-improving document systems.

Agentic OCR: Reading with Comprehension

Advanced Layout Detection and Correction Loops

Traditional OCR operates like a camera. It can see words on a page but not the relationships between them. Agentic OCR acts more like a reader: it perceives layout, understands semantics, and adapts to new document formats without retraining.

When a new invoice arrives, a traditional OCR engine depends on layout templates to find fields like the vendor name or total amount, and if the layout changes, accuracy drops—often leaving you with a noisy text dump where you must rely on heuristics like searching for “Total” and grabbing the next amount-like token or scanning for patterns that resemble an address. Agentic OCR, powered by multimodal large vision-language models (VLMs), avoids these brittle approaches by reasoning through structure dynamically: it understands page layout and reading order, identifies tables, infers headers, interprets figures, and can even extract meaning from charts or embedded images. In other words, it “reads” documents the way humans do, contextually and flexibly,far surpassing the legacy template-driven methods many older tools were built around.

This contextual understanding dramatically improves pass-through rates, the percentage of documents processed end to end without manual review. Legacy OCR pipelines often plateau around 60–70 percent automation because they break under layout variance. Agentic OCR can push pass-through rates beyond 90 percent by generalizing across unseen document types and reasoning through structural noise.

Error handling also improves significantly. Instead of failing silently or producing garbled results, an agentic OCR system self-evaluates. It can flag uncertain fields, ask clarifying questions through an agent loop, or retry parsing with adjusted parameters. The result is a more resilient pipeline that not only extracts information accurately but also knows when it might be wrong, and self correct.

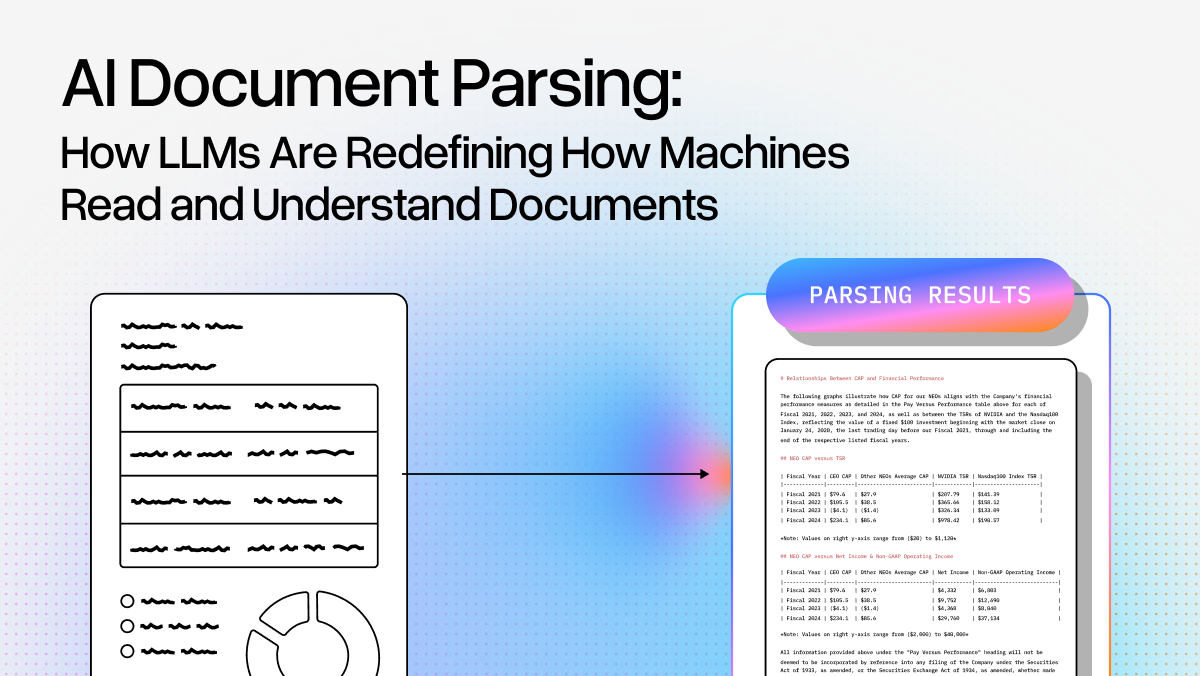

Multimodality

Traditional documents contain much more than text. They include charts, images, tables, and other embedded visuals that conventional OCR systems simply cannot interpret. By combining visual understanding with the reasoning capabilities of large language models (LLMs), agentic OCR can process these multimodal sections seamlessly. This matters most in complex materials such as financial statements or earnings reports, where much of the real insight lives in charts, diagrams, and other visual structures that demand contextual interpretation rather than surface-level extraction.

Agent Workflows: From Rules to Reasoning

Traditional RPA scripts follow fixed “if-then” rules. If a document deviates from expectations or is misclassified, the process fails.

Agentic workflows integrate LLM reasoning to plan and adapt dynamically. If an invoice lacks a vendor name, an agentic workflow might search the header and infer the correct match. It uses logic and retrieval to fill gaps rather than stopping execution.

These workflows maintain state across steps and use memory to recall past actions. They invoke tools for validation, calculations, or lookups when needed. When an error occurs, the agent analyzes why it failed and retries intelligently. For instance, if totals do not reconcile, it can recheck tables, adjust parsing, or escalate to human review with a structured explanation.

This reasoning-first approach allows workflows to achieve higher automation without losing accuracy. Pass-through rates rise not because the system ignores errors, but because it handles them intelligently.

Measuring Success: Pass-Through and Error Handling

Two metrics define the effectiveness of Document AI systems: pass-through rate and error recovery efficiency.

Pass-through rate reflects how many documents are processed automatically end to end. In traditional IDP systems, every new format reduces this rate because retraining and template updates are required. Agentic OCR and LLM reasoning remove that bottleneck by generalizing beyond training data.

Error handling efficiency measures how the system responds when issues arise. Legacy systems usually stop at the first failure, while agentic systems attempt corrections, request clarification, and record learnings. Over time, this leads to compounding improvement as the workflow learns from every exception.

Organizations adopting Document AI report lower exception volumes, faster turnaround, and fewer downstream errors. The result is not only faster document handling but smarter automation that continually improves.

How Document AI Operates

Document AI typically includes four stages that work in concert:

- Ingestion and Parsing – Documents are imported, raw data are extracted, common corruptions and errors are fixed.

- Understanding and Extraction – Agentic OCR interprets layout, identifies entities, and converts information into structured data.

- Reasoning and Validation – LLM agents apply logic to verify totals, match entities, and resolve inconsistencies.

- Action and Learning – The agent completes downstream actions such as posting data to an ERP system, then logs its reasoning for traceability and model improvement.

Frameworks like LlamaIndex provide the tools to build these intelligent workflows, handling state, retrieval, and orchestration so developers can focus on reasoning quality and workflow design.

Business Impact and Measurable Gains

The impact of Document AI is already visible across industries. Enterprises using agentic systems achieve:

- Higher throughput: More documents processed without manual review.

- Lower operational cost: Reduced need for rule updates and retraining.

- Faster deployment: Workflows adapt to new document types immediately.

- Improved compliance: Detailed reasoning logs enable full auditability.Increased accuracy: Contextual reasoning reduces false extractions.

For example, an accounts payable team using traditional IDP might manually handle 30 percent of invoices. With Document AI, that manual load can drop to under 10 percent while maintaining accuracy above 95 percent.

Building with LlamaIndex

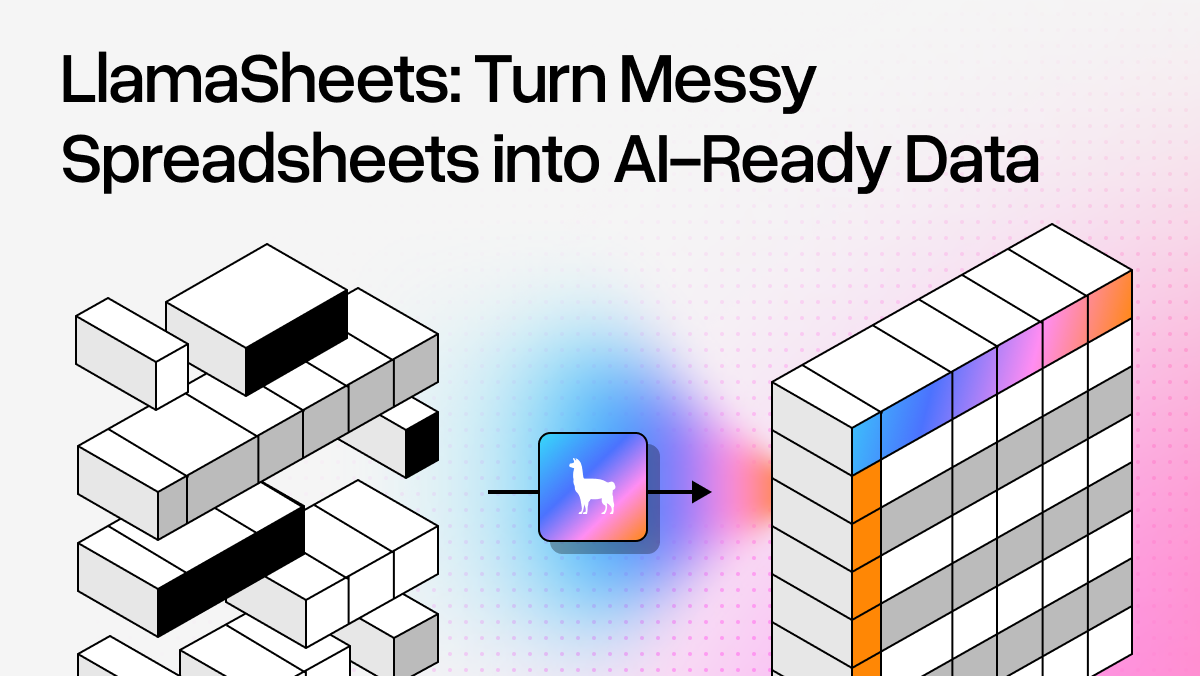

LlamaIndex powers the modern Document AI stack by combining SOTA agentic OCR and agentic orchestration and deployment in one cohesive platform.

At the core is LlamaCloud, which unifies the essential components for building and deploying intelligent document systems. LlamaParse converts documents (PDFS, Office, etc.), scans, faxes, and images into structured, layout-aware text that preserves context for downstream reasoning. LlamaExtract turns that structure into usable data through declarative extraction schemas, while Index stores and retrieves this information for contextual grounding and RAG-style reasoning.

Developers can orchestrate these steps using the LlamaIndex Workflows framework, creating multi-step, stateful pipelines that handle parsing, validation, and action with built-in memory and error recovery. The new LlamaAgents layer makes it even simpler to deploy complete document agents using templates for common tasks like invoice processing or contract analysis, all hosted and served through LlamaCloud.

Together, these components give builders a full-stack framework for Document AI, systems that can read, reason, and act across enterprise-scale document collections with accuracy and autonomy.

The Future of Document AI

As multimodal LLMs advance, Document AI will progress from document understanding to autonomous decision-making. Agents will cross-reference documents, reconcile data, and draft reports or communications independently.

Future systems will combine perception, reasoning, and execution in a single agentic loop, improving continuously from human feedback. Error handling will become proactive, and pass-through rates will approach full automation for many document types.

This is not just the next phase of OCR, it is the foundation of document AI or autonomous document intelligence.