From OCR to agentic parsing, the evolution of document intelligence is reshaping how machines see, read, and reason.

The Role of Documents in the Modern Enterprise

Documents are where critical knowledge lives: contracts, financial statements, invoices, research papers, product specs, compliance filings. They capture the operational DNA of every organization. Yet most of this information remains trapped in unstructured formats such as PDFs, scanned forms, and images.

Unlocking that information has always been a challenge. Over the last two decades, a progression of technologies, from OCR (Optical Character Recognition) to NLP (Natural Language Processing) to NER (Named Entity Recognition), has tried to make sense of documents. Each contributed something essential, but none achieved human-level understanding.

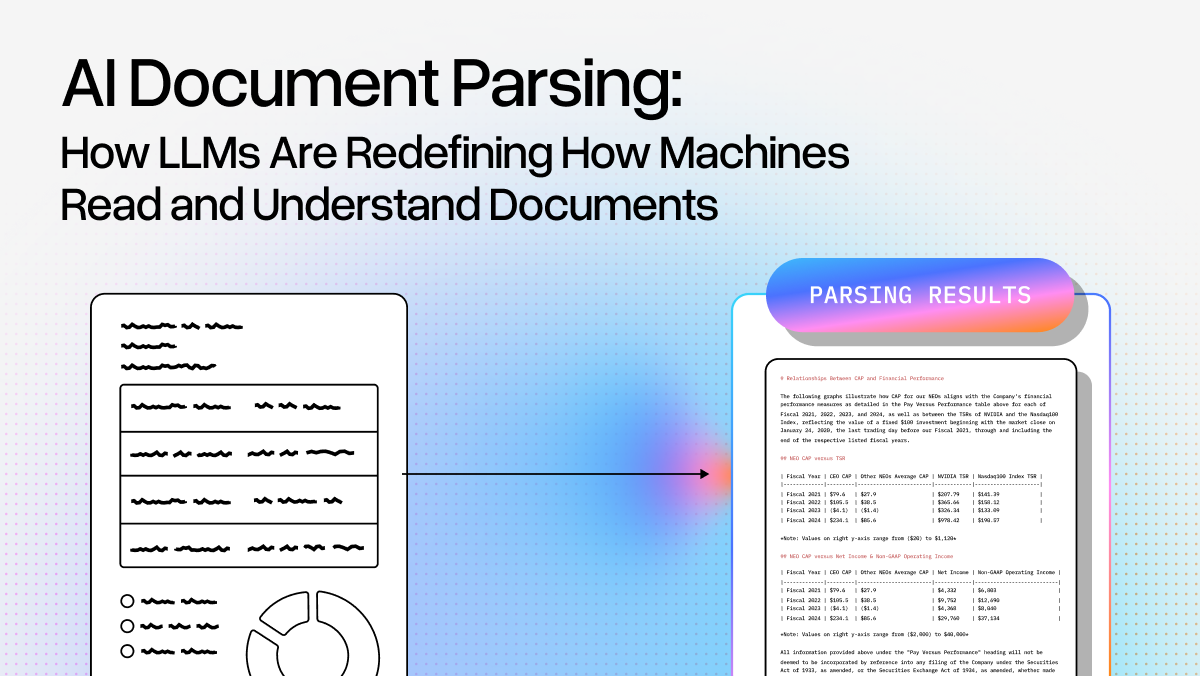

The next leap is here: AI document parsing powered by large language models (LLMs). This new approach allows systems to “read” documents more like people do, understanding layout, semantics, and context across text, tables, and visuals.

The Traditional Stack: OCR, NLP, and NER

Before the arrival of LLMs, document understanding relied on a collection of narrow, task-specific tools. These components powered the first wave of Intelligent Document Processing (IDP) systems and enabled early automation for predictable, template-driven documents such as forms, invoices, and receipts. They worked, but only when the document looked exactly as expected.

- At the core of these systems was Optical Character Recognition (OCR), which converted images into text. OCR was highly effective at digitizing printed content but could not understand layout, relationships, or meaning. It saw characters, not context, and it could not interpret tables, charts, or embedded images.

- Once OCR produced plain text, Natural Language Processing (NLP) techniques added basic linguistic structure. Tokenization, syntax parsing, and sentiment analysis helped organize text, but only after the document had been flattened into a linear string that ignored spatial relationships.

- Named Entity Recognition (NER) introduced structured extraction, identifying entities such as names, dates, addresses, and currency amounts. This allowed systems to populate fields in downstream workflows, but only for predefined entity types and predictable formats.

Together, these tools enabled functional but fragile automation. Each new document type required extensive rule writing, custom model training, and a steady stream of annotated examples. The resulting pipelines were brittle and hard to maintain: they struggled with small layout changes, required frequent retraining, and produced low pass-through rates that eroded user trust. Human operators ended up performing significant manual review and error handling, which limited scalability and slowed deployment.
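A minimal sketch of this failure mode in Python: template-style extraction with hand-written regular expressions tied to one vendor's label text. The field names, labels, and sample invoice strings are hypothetical. Real rule sets were much larger, but they broke in the same way whenever a label changed.

```python
import re

# Hypothetical hand-written "template" for one invoice layout: each field is
# tied to the exact label text seen in the examples the rules were written for.
INVOICE_TEMPLATE = {
    "invoice_number": re.compile(r"Invoice No:\s*(\S+)"),
    "total": re.compile(r"Total Due:\s*\$([\d,]+\.\d{2})"),
}


def extract_with_template(text: str) -> dict:
    """Apply each regex to flattened OCR text; fields with no match become None."""
    result = {}
    for field, pattern in INVOICE_TEMPLATE.items():
        match = pattern.search(text)
        result[field] = match.group(1) if match else None
    return result


# The layout the template was written for.
original = "ACME Corp\nInvoice No: INV-1042\nTotal Due: $1,250.00"
# Same information, but the vendor renamed two labels.
variant = "ACME Corp\nInvoice #: INV-1042\nAmount Payable: $1,250.00"

print(extract_with_template(original))  # {'invoice_number': 'INV-1042', 'total': '1,250.00'}
print(extract_with_template(variant))   # {'invoice_number': None, 'total': None}
```

The underlying data did not change, only two labels, yet both fields are lost. The only fix is to write another rule, which is the maintenance treadmill that made these pipelines so costly.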

In short, traditional systems could extract text, but they could not understand the relationships between words, paragraphs, tables, and visual elements. They lacked the generalization and reasoning capabilities needed to handle real-world document variability.

The Step Change: LLM-Powered Document Parsing

LLMs introduced a meaningful shift in how documents can be interpreted. Earlier OCR and NLP pipelines relied on templates, rules, and custom-trained models to extract structure from documents. They worked, but only when formats were predictable and training data was abundant.

LLMs changed this by offering zero-shot semantic understanding across both text and images. They can infer structure, meaning, and relationships directly from raw content, without template tuning or domain-specific training.

Zero-Shot Semantic Layout Reconstruction

Traditional layout analyzers could attempt reading-order inference but struggled with real-world complexity such as multi-column pages, irregular tables, or mixed text and image regions. They depended heavily on hand-tuned heuristics about position and spacing.
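The kind of heuristic these analyzers relied on can be sketched in a few lines of Python. This is a hypothetical illustration, not any specific product's algorithm. Text blocks carry made-up (x, y) coordinates, a naive top-to-bottom sort interleaves the two columns, and the column-aware "fix" works only because the gutter position is hard-coded.

```python
from dataclasses import dataclass


@dataclass
class TextBlock:
    x: float  # left edge of the block, in page units
    y: float  # top edge; larger values are further down the page
    text: str


def naive_reading_order(blocks):
    """Sort top-to-bottom, then left-to-right: the simplest geometric heuristic."""
    return [b.text for b in sorted(blocks, key=lambda b: (b.y, b.x))]


def column_reading_order(blocks, gutter_x):
    """Read the left column fully, then the right one. gutter_x is a hand-tuned
    threshold that only suits pages with exactly this two-column layout."""
    left = [b for b in blocks if b.x < gutter_x]
    right = [b for b in blocks if b.x >= gutter_x]
    return naive_reading_order(left) + naive_reading_order(right)


# Hypothetical two-column page: each column is one continuous passage.
page = [
    TextBlock(50, 100, "Left column starts"),
    TextBlock(50, 120, "left column ends."),
    TextBlock(320, 100, "Right column starts"),
    TextBlock(320, 120, "right column ends."),
]

print(naive_reading_order(page))
# ['Left column starts', 'Right column starts', 'left column ends.', 'right column ends.']
print(column_reading_order(page, gutter_x=300))
# ['Left column starts', 'left column ends.', 'Right column starts', 'right column ends.']
```

The column-aware version gets this page right only because `gutter_x` was tuned for it. A three-column page or a table spanning both columns would need yet another rule.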

LLMs can reconstruct semantic reading order from text alone, identifying which labels pair with which values, how sections relate, and how information should be grouped. Their strength comes from semantic coherence rather than geometry, allowing them to organize imperfect or flattened text into meaningful structure.

Deep Multimodal Understanding Out of the Box

Traditional systems required specialized vision models to interpret charts, tables, diagrams, or embedded images. These often needed training and were brittle to layout changes.

Modern multimodal LLMs understand visual elements without task-specific training. By processing the full page image, they can interpret charts, recognize table structures, read visual cues, and connect figures to captions. This enables rich understanding of complex documents like financial filings or technical reports.

As a result, LLMs can handle new document types without retraining, leading to significantly higher pass-through rates. Traditional IDP systems often broke when formats changed; LLM-based parsing is far more adaptable because it relies on general semantic understanding rather than brittle rules or templates.

The Limits of Raw LLM APIs for Document Parsing

While LLMs bring major advances in document understanding, they are not a silver bullet. Relying solely on standard LLM APIs for parsing complex documents introduces several serious limitations.

Accuracy and Complex Layouts

Frontier models are impressive at many tasks, but they still struggle with highly structured or densely formatted documents. For example, when parsing image-rich pages, embedded charts, merged-cell tables, or small-font embedded metadata, screenshot-only approaches can still miss content or hallucinate values. Even advanced vision-language models can drop subtle content when pages are large or image resolution is reduced.

Missing Enterprise Metadata

Raw LLM APIs often return plain text or basic JSON. Production document workflows usually need more: confidence scores, bounding boxes for each field, provenance (which page and region the text came from), and full audit logs. Without these, it is very hard to build reliable pipelines, trigger human-in-the-loop review when needed, or monitor performance over time.
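To make this concrete, the Python sketch below shows one possible shape for such output. Each field carries a confidence score, a page number, and a bounding box, and a simple threshold routes low-confidence fields to human-in-the-loop review. The `ExtractedField` schema, the sample values, and the 0.85 threshold are illustrative assumptions, not a standard format.

```python
from dataclasses import dataclass


@dataclass
class ExtractedField:
    """One extracted value plus the metadata a production pipeline tends to need.
    Hypothetical schema, not any particular vendor's output format."""
    name: str
    value: str
    confidence: float  # 0.0-1.0, however the parser chooses to estimate it
    page: int  # 1-based page the value came from (provenance)
    bbox: tuple[float, float, float, float]  # (x0, y0, x1, y1) on that page


def route_for_review(fields, threshold=0.85):
    """Split fields into auto-accepted and human-review queues by confidence."""
    accepted = [f for f in fields if f.confidence >= threshold]
    review = [f for f in fields if f.confidence < threshold]
    return accepted, review


fields = [
    ExtractedField("invoice_number", "INV-1042", 0.97, 1, (72.0, 90.0, 180.0, 104.0)),
    ExtractedField("total", "1,250.00", 0.62, 2, (400.0, 700.0, 470.0, 714.0)),
]
accepted, review = route_for_review(fields)
for f in review:
    # Page and bounding box let a reviewer jump straight to the source region.
    print(f"review {f.name}={f.value!r} on page {f.page} at {f.bbox}")
```

Keeping the bounding box alongside each value also makes later auditing cheap, because any accepted value can be traced back to the exact region it came from.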

High Maintenance and Prompt Engineering

Using an LLM API to parse documents often means heavy context and prompt engineering: you need to craft templates for each document type, handle exceptions, and maintain a library of prompts as document formats evolve. In effect, you end up building a parser anyway, repeating many of the burdens that legacy systems incurred.
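Even a toy version shows the maintenance burden. The Python sketch below is a hypothetical prompt library keyed by document type. The type names, field lists, and prompt wording are invented for illustration, and no model is actually called.

```python
# Hypothetical per-document-type prompt library. Every new format, or every
# change to an existing one, means another entry to write, test, and maintain.
PROMPTS = {
    "invoice": (
        "Extract invoice_number, invoice_date, and total from the document below. "
        "Return JSON with exactly those keys.\n\n{document}"
    ),
    "bank_statement": (
        "Extract account_number, statement_period, and closing_balance from the "
        "document below. Return JSON with exactly those keys.\n\n{document}"
    ),
}


def build_prompt(doc_type: str, document_text: str) -> str:
    """Look up the template for doc_type and fill in the document text."""
    try:
        template = PROMPTS[doc_type]
    except KeyError:
        # Unseen formats need custom handling: the same gap template parsers had.
        raise ValueError(f"no prompt template for document type {doc_type!r}") from None
    return template.format(document=document_text)


print(build_prompt("invoice", "Invoice No: INV-1042"))
```

Calling `build_prompt("purchase_order", ...)` raises `ValueError`, and closing that gap means writing, testing, and versioning yet another prompt.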

Operational and Cost Challenges

LLM APIs can impose rate limits, unpredictable latency, and high per-page costs. At scale, these factors make them fragile for high-volume document workflows. Enterprise-grade parsing needs consistent throughput, low latency, predictable cost, and operational resilience, none of which raw LLM APIs guarantee on their own.
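With raw APIs, the caller is responsible for resilience. One common mitigation for rate limits is retrying with exponential backoff and jitter, sketched below in Python. `RateLimitError` and `flaky_parse_page` are hypothetical stand-ins for a real client's error type and page-parsing request. Backoff smooths over transient throttling, but it does nothing for per-page cost or sustained throughput.

```python
import random
import time


class RateLimitError(Exception):
    """Stand-in for whatever rate-limit error a given LLM client raises (hypothetical)."""


def call_with_backoff(call, max_retries=5, base_delay=1.0, max_delay=30.0):
    """Retry `call` on RateLimitError with exponential backoff plus full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimitError:
            if attempt == max_retries:
                raise  # give up after the final retry
            # Delay grows as base_delay * 2**attempt, capped at max_delay, then
            # randomized so that many workers do not retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** attempt)
            time.sleep(random.uniform(0, delay))


# Usage: a hypothetical page request that is throttled twice before succeeding.
attempts = {"n": 0}


def flaky_parse_page():
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RateLimitError("429 Too Many Requests")
    return {"page": 1, "text": "parsed"}


result = call_with_backoff(flaky_parse_page, base_delay=0.01)
print(result, "after", attempts["n"], "attempts")
```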

Agent Engineering: Bringing Intelligence to the Parsing Process

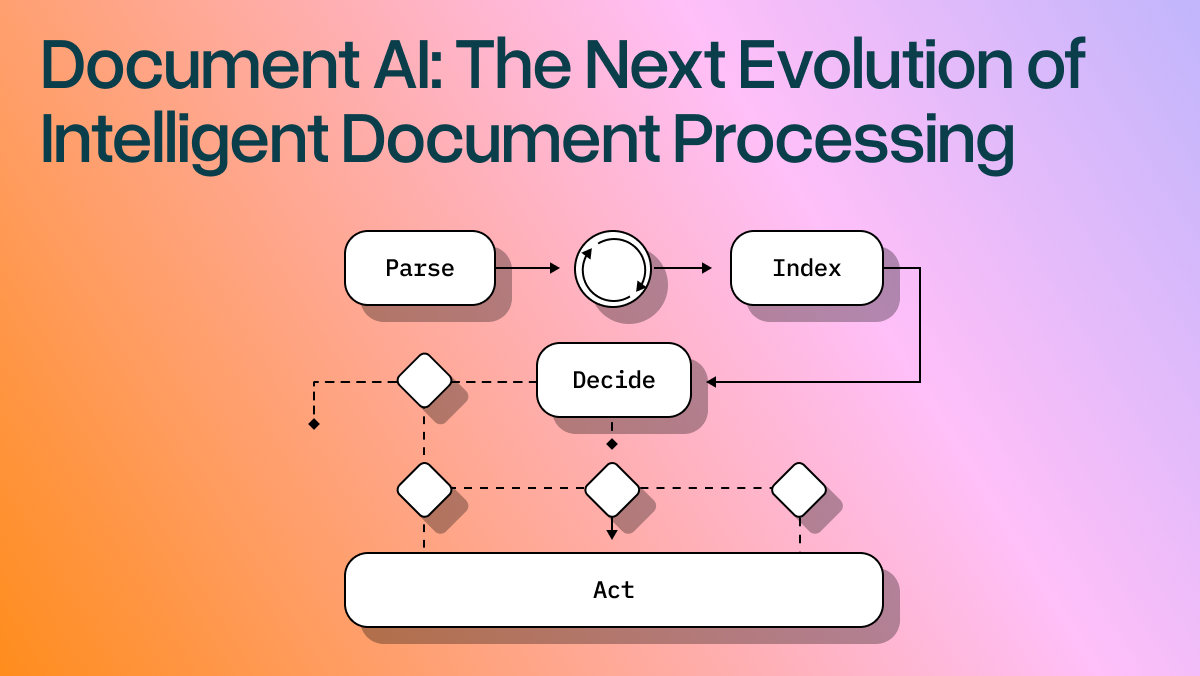

The next evolution of AI document parsing is agent engineering, combining LLM reasoning with modular, orchestrated components that can plan, reflect, and correct themselves.

An agentic parser operates in loops rather than just parsing once and stopping. It can:

- Mix techniques: Use OCR, LLM reasoning, retrieval, and structured extractors together.

- Run correction loops: Detect anomalies, compare against schema constraints, and re-query uncertain sections.

- Reflect and evaluate: Generate internal confidence scores and re-evaluate low-confidence outputs before passing results forward.

- Add metadata and citations: Link every extracted element back to its source region in the document for explainability and auditability.
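The loop described above can be sketched in plain Python. This is a hypothetical illustration, not how LlamaParse or any other product is implemented. The two extractors are stubs that return canned outputs, standing in for an OCR-plus-rules pass and a stronger multimodal LLM pass. The schema validators and the 0.8 confidence threshold are invented, and `source` is a minimal stand-in for richer provenance such as bounding boxes.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    value: Optional[str]
    confidence: float
    source: str  # provenance: which extractor produced it and from which page


# Schema constraints: each field must satisfy a validator (hypothetical schema).
SCHEMA = {
    "invoice_number": lambda v: re.fullmatch(r"INV-\d+", v) is not None,
    "total": lambda v: re.fullmatch(r"\d{1,3}(,\d{3})*\.\d{2}", v) is not None,
}


def fast_extractor(field):
    """Stub for a cheap first pass (e.g. OCR plus rules) with canned outputs."""
    canned = {
        "invoice_number": Candidate("INV-1042", 0.95, "ocr:page1"),
        "total": Candidate("1,25O.00", 0.55, "ocr:page2"),  # OCR misread 0 as O
    }
    return canned[field]


def careful_extractor(field):
    """Stub for a slower, stronger pass (e.g. a multimodal LLM on the page region)."""
    canned = {
        "invoice_number": Candidate("INV-1042", 0.97, "vlm:page1"),
        "total": Candidate("1,250.00", 0.93, "vlm:page2"),
    }
    return canned[field]


def is_acceptable(field, cand, min_conf):
    """Reflect step: a value must pass its schema check and the confidence bar."""
    return (cand.value is not None and SCHEMA[field](cand.value)
            and cand.confidence >= min_conf)


def agentic_extract(extractors, min_conf=0.8):
    """Run extractors in order, re-querying only the fields that failed so far."""
    results = {}
    pending = list(SCHEMA)
    for extract in extractors:
        still_pending = []
        for field in pending:
            cand = extract(field)
            if is_acceptable(field, cand, min_conf):
                results[field] = cand
            else:
                still_pending.append(field)
        pending = still_pending
        if not pending:
            break
    return results, pending  # anything still pending goes to human review


results, needs_review = agentic_extract([fast_extractor, careful_extractor])
for field, cand in results.items():
    print(field, cand.value, cand.confidence, cand.source)
print("needs review:", needs_review)
```

Here the first pass accepts `invoice_number` but rejects `total`, which fails the format check and falls below the confidence threshold. Only `total` is escalated to the second pass, and any field still failing after the last extractor would be returned in `needs_review`.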

This agentic approach moves document parsing from a single-shot task to a reasoning workflow, capable of self-improvement and verifiable output.

At LlamaIndex, for example, developers can implement this architecture with LlamaParse for agentic document parsing, LlamaExtract for information extraction agents, and Agent Workflows for building end-to-end document automation.

The Future of AI Document Parsing

AI document parsing is quickly evolving from text extraction to document intelligence: systems that not only read but also reason, summarize, and act.

In the near future, we’ll see:

- Fully multimodal parsing: Unified models that process text, images, tables, and diagrams seamlessly.

- End-to-end document agents: Parsers that validate their own results, connect to knowledge bases, and trigger downstream workflows automatically.

- Continuous learning loops: Systems that refine extraction through feedback and reflection without manual retraining.

- Explainable outputs: Every piece of extracted data will include provenance, showing exactly where it came from in the source document.

Ultimately, document parsing is becoming the bridge between unstructured knowledge and structured action. It’s no longer about digitizing paper; it’s about transforming documents into living, machine-readable intelligence.