DeepSeek recently released a new paper and model that signal a shift in how we think about documents and context. The name "DeepSeek-OCR" suggests improvements in reading text from images, but the more interesting part of their work explores using vision as a compression scheme for textual information.

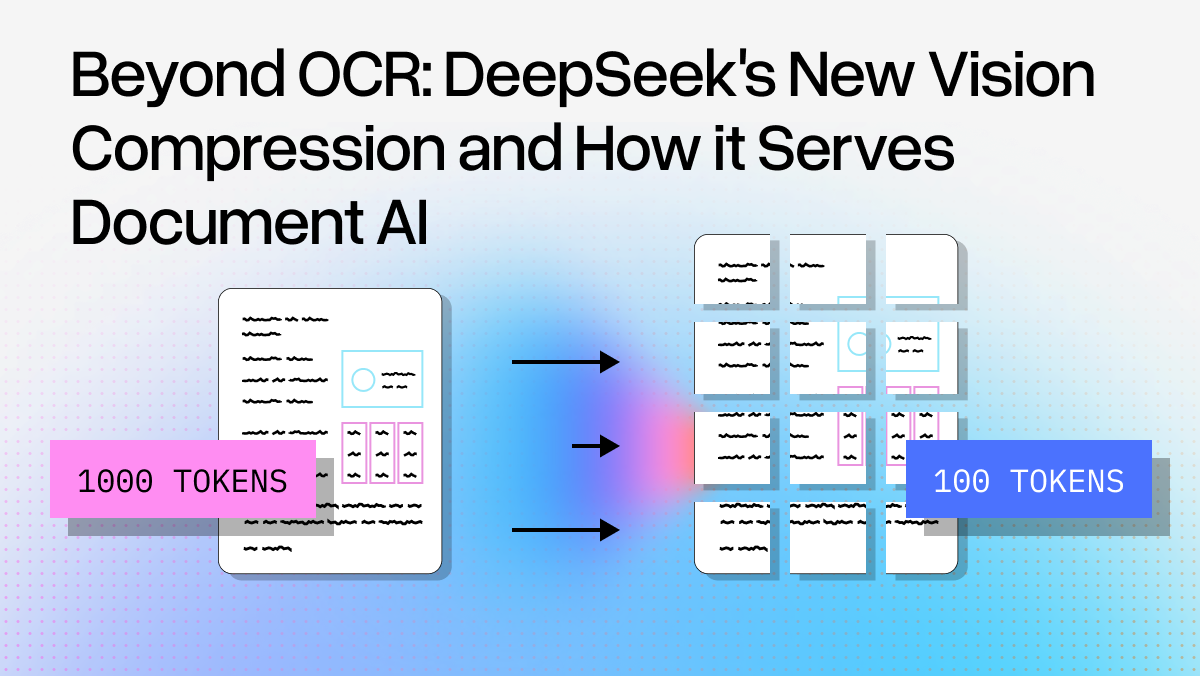

They found that text can be compressed into roughly 10× fewer vision tokens (for example, about 1,000 text tokens into 100 vision tokens) and decoded back with high accuracy: around 97% when the compression ratio stays below 10×, and still above 90% at roughly 10×.

Getting the best results from LLMs and AI agents depends on providing relevant context efficiently, which is the core of context engineering. Context windows have limits, and every token counts. DeepSeek-OCR suggests that we might be thinking about this problem entirely wrong.

In this article, we explain what DeepSeek-OCR really is, what problems it solves, and what this means for document parsing tools like LlamaParse, because the answer isn't as simple as "compression replaces parsing" or "they work together perfectly."

ℹ️ While reading up on DeepSeek-OCR, we found Sam Witteveen's video "DeepSeek OCR - More than OCR" incredibly insightful. Shoutout to him for helping make sense of the paper.

What DeepSeek-OCR Actually Is

When DeepSeek released their "OCR" model, the name arguably undersold what they'd built: an optimized vision-text compression system that treats images as compact representations of textual information.

✨ Insight #1: Instead of converting documents to text tokens (expensive), what if we kept them as compressed visual representations (cheap)?

How It Works

DeepSeek's DeepEncoder uses a two-stage architecture:

Stage 1: High-Resolution Perception. A SAM model (80M parameters) processes the document with window attention, capturing fine text details at high resolution without exploding memory usage.

Stage 2: Intelligent Compression. A 16× convolutional compressor reduces the token count, then a CLIP model (300M parameters) applies global attention to understand relationships across the entire document.
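To make the token arithmetic concrete, here is a minimal sketch of how a page image's size might translate into a vision-token count. It assumes square inputs and a 16×16-pixel patch size in the first stage, which is our reading of the paper rather than something stated above, and `vision_tokens` is a hypothetical helper, not part of any released API.

```python
def vision_tokens(side_px: int, patch_px: int = 16, compression: int = 16) -> int:
    """Estimate vision tokens for a square image of side_px pixels.

    Assumptions (ours, for illustration): 16x16-pixel patches in stage 1,
    followed by the 16x convolutional compressor before global attention.
    """
    if side_px % patch_px != 0:
        raise ValueError("side_px must be a multiple of patch_px")
    patches_per_side = side_px // patch_px
    patch_tokens = patches_per_side ** 2   # tokens handled by window attention
    return patch_tokens // compression     # tokens handled by global attention


# A 1024x1024 page: 64 * 64 = 4096 patch tokens, then 4096 / 16 = 256 tokens.
print(vision_tokens(1024))
```

Under these assumptions, the cheap window-attention stage sees thousands of patch tokens, while the more expensive global-attention stage only sees a few hundred.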

Compression at Multiple Resolutions

They offer different "zoom levels" depending on your needs (the compression ratios are approximate, since they depend on how much text a page contains):

- Tiny mode: 64 tokens per page (20× compression)

- Small mode: 100 tokens per page (10× compression)

- Base mode: 256 tokens per page (~4× compression)

- Large mode: 400 tokens per page (~2.5× compression)

- Gundam mode: Dynamic (400-1,800 tokens for complex layouts)
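As a rough illustration of how these budgets could be used, the sketch below picks the cheapest fixed mode that keeps the compression ratio (text tokens divided by vision tokens) under a chosen limit. The `pick_mode` helper and the default limit of 10× are our own assumptions for illustration, and the dynamic Gundam mode is left out because its budget is not fixed.

```python
# Fixed-resolution modes and their per-page token budgets, from the list above.
MODES = {"Tiny": 64, "Small": 100, "Base": 256, "Large": 400}


def pick_mode(text_tokens: int, max_ratio: float = 10.0) -> tuple[str, float]:
    """Return (mode, ratio) for the cheapest mode within max_ratio.

    The compression ratio is text tokens divided by vision tokens.
    Falls back to the largest fixed mode if no mode satisfies the limit.
    """
    for name, budget in sorted(MODES.items(), key=lambda kv: kv[1]):
        ratio = text_tokens / budget
        if ratio <= max_ratio:
            return name, ratio
    name, budget = max(MODES.items(), key=lambda kv: kv[1])
    return name, text_tokens / budget


# Try a sparse page, a typical page, and a dense page.
for n in (600, 1000, 2500):
    print(n, pick_mode(n))
```

A denser page pushes the choice toward a larger mode, which is the trade-off between token cost and decoding accuracy in practice.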

For comparison: a typical VLM like Qwen2.5-VL uses 3,949 tokens per page. InternVL3 uses 6,790. DeepSeek-OCR's Base mode achieves competitive accuracy with just 256 tokens—a 15-26× reduction.
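The reduction range quoted above follows directly from the per-page token counts. A short check, using only the numbers in the paragraph (the variable names are ours):

```python
BASE_MODE_TOKENS = 256
vlm_tokens_per_page = {"Qwen2.5-VL": 3949, "InternVL3": 6790}

# Reduction factor = tokens the other VLM needs / tokens Base mode needs.
reductions = {
    name: tokens / BASE_MODE_TOKENS
    for name, tokens in vlm_tokens_per_page.items()
}

for name, factor in reductions.items():
    print(f"{name}: {factor:.1f}x fewer tokens per page")
```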

✨ Insight #2: As exciting as this new model is, it’s important to note its limits. At 20× compression, the model maintains only ~60% accuracy.

This implies there is a “compression limit” on how much information today’s models and approaches can retain from an input. The paper's 10× example also uses only about 1,000 text tokens, which, as anyone developing in the GenAI space knows, is far smaller than the context windows of current LLMs.

The Vision Memory Paradigm

Their paper proposes an intriguing application of this compression architecture: keep recent context as high-fidelity text tokens, but compress older context into progressively lower-resolution visual representations. This mirrors human memory: recent conversations stay sharp, while older ones fade but remain accessible.
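One way to picture this is a tiering policy that maps the age of each conversation turn to a representation. The sketch below is only an illustration: the age thresholds are hypothetical, it assumes each turn renders to a single page, and it reuses the per-page token budgets of the modes listed earlier.

```python
from dataclasses import dataclass

# (max age, representation, token budget). The thresholds are hypothetical.
TIERS = [
    (2, "text", None),   # newest turns keep their original text tokens
    (5, "Large", 400),
    (10, "Base", 256),
    (20, "Small", 100),
]
OLDEST = ("Tiny", 64)    # everything older than the last tier


@dataclass
class Turn:
    age: int          # 0 = most recent turn
    text_tokens: int  # token count of the original text


def representation(turn: Turn) -> tuple[str, int]:
    """Return (format, token cost) for a turn based on its age."""
    name, budget = OLDEST
    for max_age, tier_name, tier_budget in TIERS:
        if turn.age <= max_age:
            name, budget = tier_name, tier_budget
            break
    # Keep plain text when an image would not actually be cheaper.
    if budget is None or turn.text_tokens <= budget:
        return ("text", turn.text_tokens)
    return (name, budget)


history = [Turn(age=a, text_tokens=800) for a in range(0, 30, 3)]
total = sum(representation(t)[1] for t in history)
original = sum(t.text_tokens for t in history)
print(f"{total} tokens instead of {original}")
```

The older a turn gets, the fewer tokens it costs, at the price of lower decoding fidelity if it ever needs to be read back.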

The Traditional Parsing Problem

Before we dive into what this changes, let's establish why document parsing exists in the first place.

Most valuable information lives in formats LLMs can't natively consume: PDFs with complex layouts, tables spanning pages, documents mixing charts and text. You need to translate these into something an LLM understands: clean text, markdown, structured JSON, layout information. Other downstream applications beyond LLMs also benefit from structured outputs like this.

Existing Approaches and Their Limitations

Pure OCR Approach:

- No visual understanding of what it “reads” (just character extraction)

- Rigid heuristics that don’t generalize to varied inputs

- Lacks semantic structure preservation

Vision-Language Models (VLMs):

- Can see and understand simultaneously

- Need prompt tuning to generalize reliably across document types

- Tend to hallucinate on pages with dense text or repeated characters

- Use thousands of vision tokens per page

Real-world documents, such as financial reports with long nested tables, technical manuals with diagrams, and legal contracts with intricate formatting, expose these limitations and the pain they cause.

How LlamaParse Solves This Today

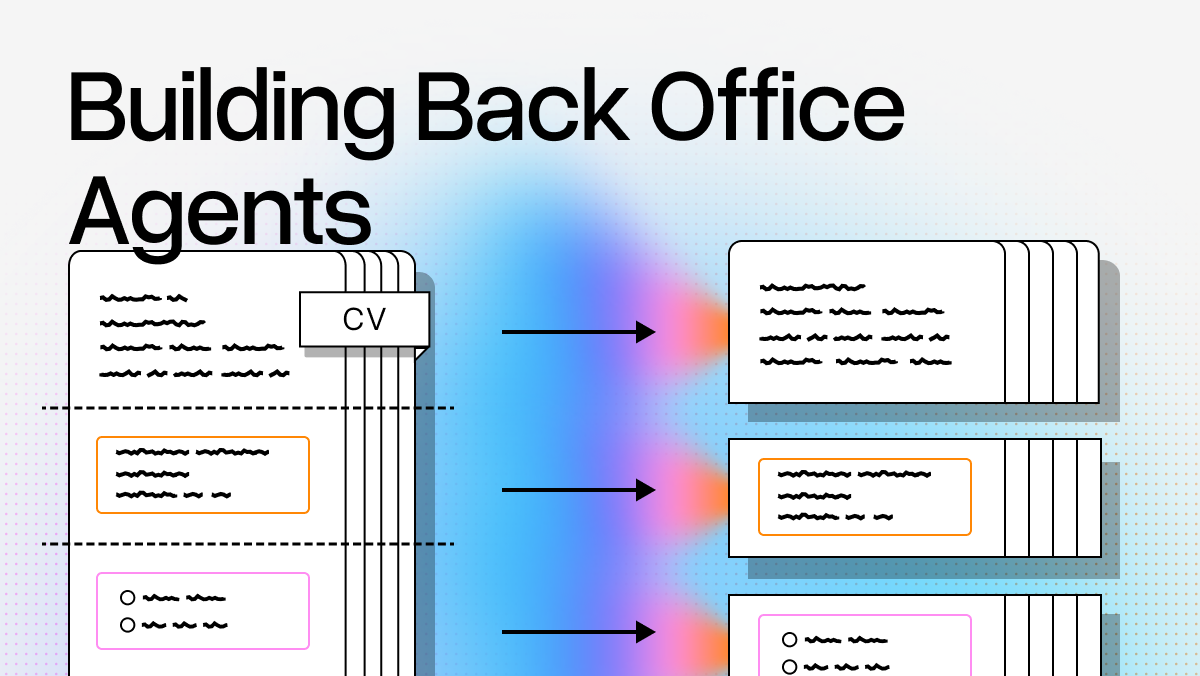

At LlamaIndex, parsing is central to what we do. LlamaParse takes a multi-agent approach that combines LLM intelligence, VLMs, and OCR, essentially running specialized agents that work together:

- Vision Agent: Identifies layout elements (tables, headers, columns)

- OCR Agent: Extracts text with high precision

- Structure Agent: Builds semantic relationships ("this table belongs to Section 2.3")

- LLM Agent: Understands context and meaning

- Synthesis Agent: Outputs clean, structured results (markdown, JSON, etc.)

Think of it as a team of specialists examining your document from different angles, cross-checking each other's work to minimize errors.

Crucially, LlamaParse produces structured output: tables as JSON, semantic hierarchies, and layout elements. This structured representation is what makes downstream AI applications possible.
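To show why structure matters downstream, here is a small sketch of what a table might look like once it is available as JSON-style data. The schema and the values are made up for illustration and are not LlamaParse's actual output format.

```python
import json

# Hypothetical parsed table; field names and values are illustrative only.
parsed_table = {
    "type": "table",
    "section": "2.3",
    "columns": ["quarter", "revenue_usd_m"],
    "rows": [["Q1", 12.5], ["Q2", 14.0], ["Q3", 13.25]],
}


def column(table: dict, name: str) -> list:
    """Return all values of one named column."""
    idx = table["columns"].index(name)
    return [row[idx] for row in table["rows"]]


# With explicit columns and rows, aggregation is ordinary code, not model inference.
total_revenue = sum(column(parsed_table, "revenue_usd_m"))
print(json.dumps({"section": parsed_table["section"], "total": total_revenue}))
```

Once the table is machine-readable, loading it into a database or validating it against a rule becomes a plain programming task.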

The Future of Document Parsing and Memory

With the introduction of models like DeepSeek-OCR, here are two possible futures we can imagine as the technology matures.

Future 1: “Parsing” Becomes a Form of Compression

For many applications (particularly retrieval-augmented generation (RAG), document Q&A, and summarization), DeepSeek-OCR suggests you might not need what we currently call “parsing” at all.

You could imagine a workflow like:

- Render PDFs as images (at appropriate resolution)

- Encode with DeepSeek-OCR-style compression

- Store compressed representations in a vector database (ColPali, anyone?)

- Query directly against visual encodings

- Let the VLM decode relevant sections on-demand

This is the future where “parsing” becomes something fundamentally different: visual compression handles document ingestion end-to-end. However, this future only exists if:

- These types of vision models can scale to modern context window sizes. Compressing 1,000 text tokens at a time isn’t going to cut it for most document applications.

- Your use case does not require access to the actual contents or structure of the original file.
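The encode, store, and query steps of the workflow above can be sketched with a tiny in-memory index. Everything here is a stand-in: the encoders are toy functions so the example runs, each page is reduced to a single vector compared by cosine similarity (ColPali-style retrieval uses multiple vectors per page), and page rendering and on-demand decoding are assumed to happen outside the sketch.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence

Vector = Sequence[float]


@dataclass
class PageEntry:
    doc_id: str
    page_no: int
    embedding: Vector  # stand-in for the compressed visual representation


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VisualIndex:
    """In-memory stand-in for a vector database of page encodings."""

    def __init__(self, encode_page: Callable[[bytes], Vector],
                 encode_query: Callable[[str], Vector]) -> None:
        self.encode_page = encode_page
        self.encode_query = encode_query
        self.entries: list[PageEntry] = []

    def add_document(self, doc_id: str, page_images: Sequence[bytes]) -> None:
        # Encode each rendered page and store the result.
        for page_no, image in enumerate(page_images, start=1):
            self.entries.append(PageEntry(doc_id, page_no, self.encode_page(image)))

    def search(self, query: str, k: int = 3) -> list[PageEntry]:
        # Rank stored pages by similarity to the query encoding.
        q = self.encode_query(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e.embedding), reverse=True)
        return ranked[:k]


# Toy encoders so the sketch runs; a real system would call a vision encoder.
def toy_page_encoder(image: bytes) -> Vector:
    return [image.count(b"a"), image.count(b"b"), 1.0]


def toy_query_encoder(query: str) -> Vector:
    return [query.count("a"), query.count("b"), 1.0]


index = VisualIndex(toy_page_encoder, toy_query_encoder)
index.add_document("report.pdf", [b"aaaa", b"bbbb"])
best = index.search("bbb", k=1)[0]
print(best.doc_id, best.page_no)
```

The pages returned by `search` would then be handed to the VLM for decoding, so only the relevant sections ever get expanded back into text.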

Future 2: Parsing + Compression

Not all applications just need understanding. If you're building systems that require:

- Structured data extraction (tables as JSON/Markdown/SQL for databases)

- Semantic hierarchies (section trees, document structure)

- Cross-references (linking citations, footnotes, appendices)

- Precise text manipulation (redaction, editing, compliance checking)

...then you still need parsing. Because compressed visual representations don't give you machine-readable structure, just efficient understanding.

However, the compression aspect from DeepSeek-OCR can still accelerate parsing. For example:

- Supply extracted text (if any) and images to a “DeepSeek-OCR-like LLM” for processing

- Take advantage of the compression factor: when parsing long documents, you can swap text inputs for image inputs, while maintaining context of the overall document

- Store structured data for applications that need it

- Use visual compression for efficient retrieval/context

- Pull structured data when precision is required

This is the future where parsing and compression are complementary: where you need both, but for different reasons. The compression can save tokens while also enabling better parsing of long documents.
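A minimal sketch of this complementary setup keeps both representations for each page and chooses between them per task. The task names, the `ParsedPage` type, and the `context_for` helper are hypothetical and only mirror the requirements listed above.

```python
from dataclasses import dataclass

# Illustrative task names for work that needs machine-readable structure.
NEEDS_STRUCTURE = {"table_extraction", "section_tree", "cross_reference", "redaction"}


@dataclass
class ParsedPage:
    page_no: int
    structured: dict     # parser output (tables, hierarchy, and so on)
    vision_tokens: int   # size of the compressed visual form of the page


def context_for(task: str, pages: list[ParsedPage]) -> list:
    """Pick which representation of each page to hand to the model."""
    if task in NEEDS_STRUCTURE:
        # Precision path: use the stored structured data.
        return [p.structured for p in pages]
    # Understanding path: use the cheaper compressed visual form.
    return [("visual", p.page_no, p.vision_tokens) for p in pages]


pages = [ParsedPage(page_no=1, structured={"tables": []}, vision_tokens=256)]
print(context_for("redaction", pages))
print(context_for("summarization", pages))
```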

What This Means for LlamaParse

The honest truth is that we think the most likely path forward is for LlamaParse and similar technologies to incorporate DeepSeek-OCR-like technology into their stack. But it will boil down to what you (the users of these tools) are building.

If you're building RAG systems where understanding is sufficient, DeepSeek-OCR-style compression and models like ColPali could handle 80% of use cases more efficiently than traditional parsing, assuming that the underlying technology continues to scale.

But if you're building applications that need structured data (databases, hierarchies, compliance systems, precise extraction), classic parsing will remain essential. Compression gives you understanding; parsing gives you structure. If anything, models like DeepSeek-OCR will improve existing agentic OCR pipelines like LlamaParse in the future.

At the end of the day, our goal is to provide the most flexible and accurate document parsing system.

Resources to Learn More

For those wanting to dive deeper into DeepSeek-OCR's approach:

- DeepSeek-OCR Paper - The original technical paper "DeepSeek-OCR: Contexts Optical Compression" with full technical details, benchmarks, and methodology

- DeepSeek-OCR Model - The released model on Hugging Face

- Video Explanation by Sam Witteveen - An excellent breakdown of why this is about compression rather than traditional OCR

- LlamaParse Documentation - Learn more about our approach to intelligent document parsing

Have thoughts on how compression and parsing should evolve together? We'd love to hear from you. Join the discussion in our Discord community or reach out on Twitter/X.