We're thrilled to announce a new feature in LlamaIndex that expands our knowledge graph capabilities to be more flexible, extendible, and robust. Introducing the Property Graph Index!

Why Property Graphs?

Traditional knowledge graph representations like knowledge triples (subject, predicate, object) are limited in expressiveness. They lack the ability to:

- Assign labels and properties to nodes and relationships

- Represent text nodes as vector embeddings

- Perform both vector and symbolic retrieval

Our existing KnowledgeGraphIndex was burdened with these limitations, as well as general limitations on the architecture of the index itself.

The Property Graph Index solves these issues. By using a labeled property graph representation, it enables far richer modeling, storage and querying of your knowledge graph.

With Property Graphs, you can:

- Categorize nodes and relationships into types with associated metadata

- Treat your graph as a superset of a vector database for hybrid search

- Express complex queries using the Cypher graph query language

This makes Property Graphs a powerful and flexible choice for building knowledge graphs with LLMs.

Constructing Your Graph

The Property Graph Index offers several ways to extract a knowledge graph from your data, and you can combine as many as you want:

1. Schema-Guided Extraction: Define allowed entity types, relationship types, and their connections in a schema. The LLM will only extract graph data that conforms to this schema.

python

from llama_index.indices.property_graph import SchemaLLMPathExtractor

entities = Literal["PERSON", "PLACE", "THING"]

relations = Literal["PART_OF", "HAS", "IS_A"]

schema = {

"PERSON": ["PART_OF", "HAS", "IS_A"],

"PLACE": ["PART_OF", "HAS"],

"THING": ["IS_A"],

}

kg_extractor = SchemaLLMPathExtractor(

llm=llm,

possible_entities=entities,

possible_relations=relations,

kg_validation_schema=schema,

strict=True, # if false, allows values outside of spec

) 2. Implicit Extraction: Use LlamaIndex constructs to specify relationships between nodes in your data. The graph will be built based on the node.relationships attribute. For example, when running a document through a node parser, the PREVIOUS , NEXT and SOURCE relationships will be captured.

python

from llama_index.core.indices.property_graph import ImplicitPathExtractor

kg_extractor = ImplicitPathExtractor() 3. Free-Form Extraction: Let the LLM infer the entities, relationship types and schema directly from your data in a free-form manner. (This is similar to how the KnowledgeGraphIndex works today.)

python

from llama_index.core.indices.property_graph import SimpleLLMPathExtractor

kg_extractor = SimpleLLMPathExtractor(llm=llm)Mix and match these extraction approaches for fine-grained control over your graph structure.

python

from llama_index.core import PropertyGraphIndex

index = PropertyGraphIndex.from_documents(docs, kg_extractors=[...])Embeddings

By default, all graph nodes are embedded. While some graph databases support embeddings natively, you can also specify and use any vector store from LlamaIndex on top of your graph database.

python

index = PropertyGraphIndex(..., vector_store=vector_store)Querying Your Graph

The Property Graph Index supports a wide variety of querying techniques that can be combined and run concurrently.

1. Keyword/Synonym-Based Retrieval: Expand your query into relevant keywords and synonyms and find matching nodes.

python

from llama_index.core.indices.property_graph import LLMSynonymRetriever

sub_retriever = LLMSynonymRetriever(index.property_graph_store, llm=llm)2. Vector Similarity: Retrieve nodes based on the similarity of their vector representations to your query.

python

from llama_index.core.indices.property_graph import VectorContextRetriever

sub_retriever = VectorContextRetriever(

index.property_graph_store,

vector_store=index.vector_store,

embed_model=embed_model,

)3. Cypher Queries: Use the expressive Cypher graph query language to specify complex graph patterns and traverse multiple relationships.

python

from llama_index.core.indices.property_graph import CypherTemplateRetriever

from llama_index.core.bridge.pydantic import BaseModel, Field

class Params(BaseModel):

“””Parameters for a cypher query.”””

names: list[str] = Field(description=”A list of possible entity names or keywords related to the query.”)

cypher_query = """

MATCH (c:Chunk)-[:MENTIONS]->(o)

WHERE o.name IN $names

RETURN c.text, o.name, o.label;

"""

sub_retriever = CypherTemplateRetriever(

index.property_graph_store,

Params,

cypher_query,

llm=llm,

)Instead of providing a template, you can also let the LLM write the entire cypher query based on context from the query and database:

python

from llama_index.core.indices.property_graph import TextToCypherRetriever

sub_retriever = TextToCypherRetriever(index.property_graph_store, llm=llm)4. Custom Graph Traversal: Define your own graph traversal logic by subclassing key retriever components.

These retrievers can be combined and composed for hybrid search that leverages both the graph structure and vector representations of nodes.

python

from llama_index.indices.property_graph import VectorContextRetriever, LLMSynonymRetriever

vector_retriever = VectorContextRetriever(index.property_graph_store, embed_model=embed_model)

synonym_retriever = LLMSynonymRetriever(index.property_graph_store, llm=llm)

retriever = index.as_retriever(sub_retrievers=[vector_retriever, synonym_retriever])Using the Property Graph Store

Under the hood, the Property Graph Index uses a PropertyGraphStore abstraction to store and retrieve graph data. You can also use this store directly for lower-level control.

The store supports:

- Inserting and updating nodes, relationships and properties

- Querying nodes by ID or properties

- Retrieving relationship paths from a starting node

- Executing Cypher queries (if the backing store supports it)

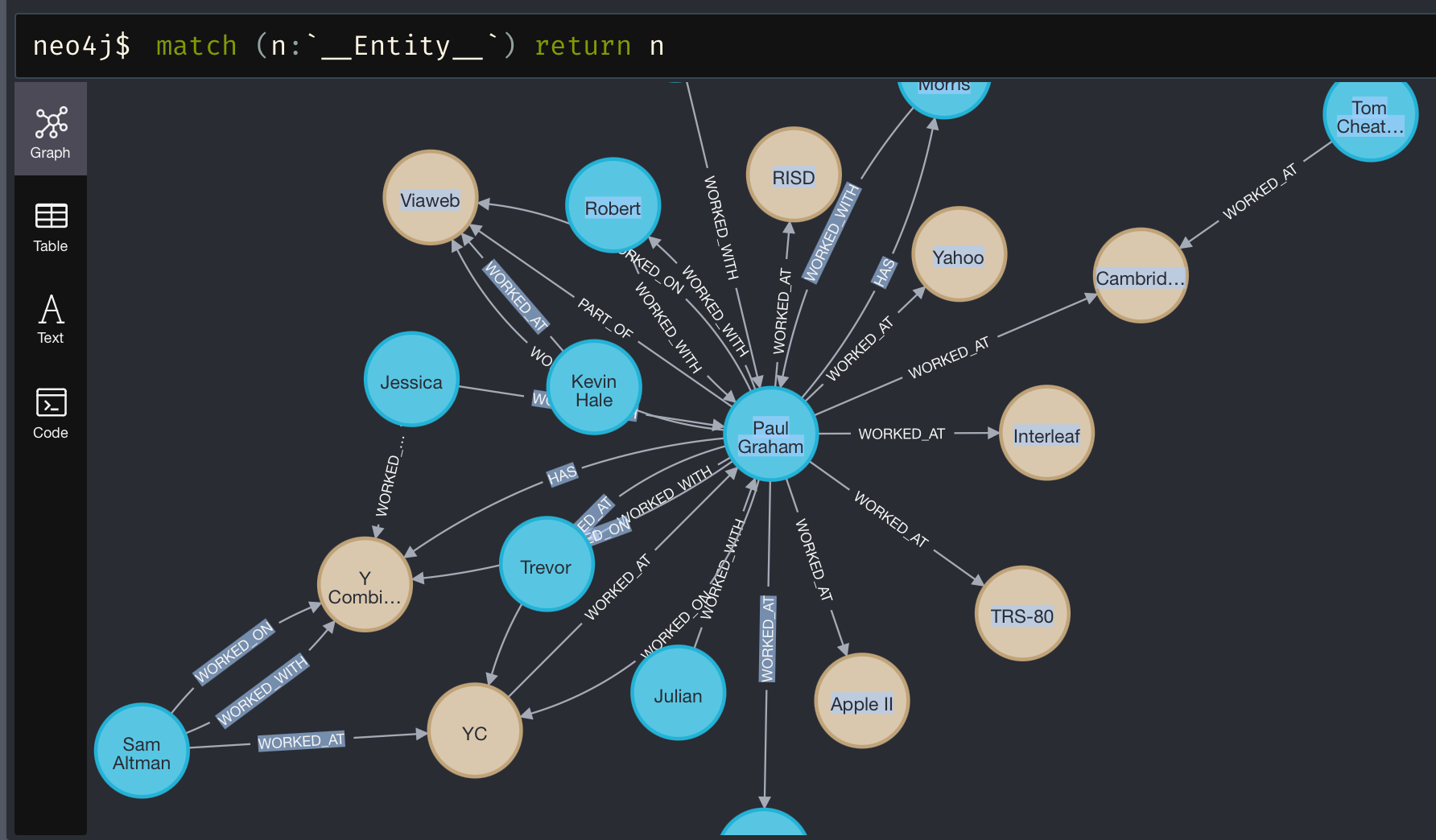

python

from llama_index.graph_stores.neo4j import Neo4jPGStore

graph_store = Neo4jPGStore(

username="neo4j",

password="password",

url="bolt://localhost:7687",

)

# insert nodes

nodes = [

EntityNode(name="llama", label="ANIMAL", properties={"key": "value"}),

EntityNode(name="index", label="THING", properties={"key": "value"}),

]

graph_store.upsert_nodes(nodes)

# insert relationships

relations = [

Relation(

label="HAS",

source_id=nodes[0].id,

target_id=nodes[1].id,

)

]

graph_store.upsert_relations(relations)

# query nodes

llama_node = graph_store.get(properties={"name": "llama"})[0]

# get relationship paths

paths = graph_store.get_rel_map([llama_node], depth=1)

# run Cypher query

results = graph_store.structured_query("MATCH (n) RETURN n LIMIT 10") Several backing stores are supported, including in-memory, disk-based, and Neo4j.

Learn More

- Property Graph Index Overview

- Basic Usage Notebook

- Advanced Usage with Neo4j

- Using the Property Graph Store Directly

A huge thanks to our partners at Neo4j for their collaboration on this launch, especially Tomaz Bratanic for the detailed integration guide and design guidance.

We can't wait to see what you build with the new Property Graph Index! As always, feel free to join our Discord to share your projects, ask questions, and get support from the community.

Happy building!

The LlamaIndex Team